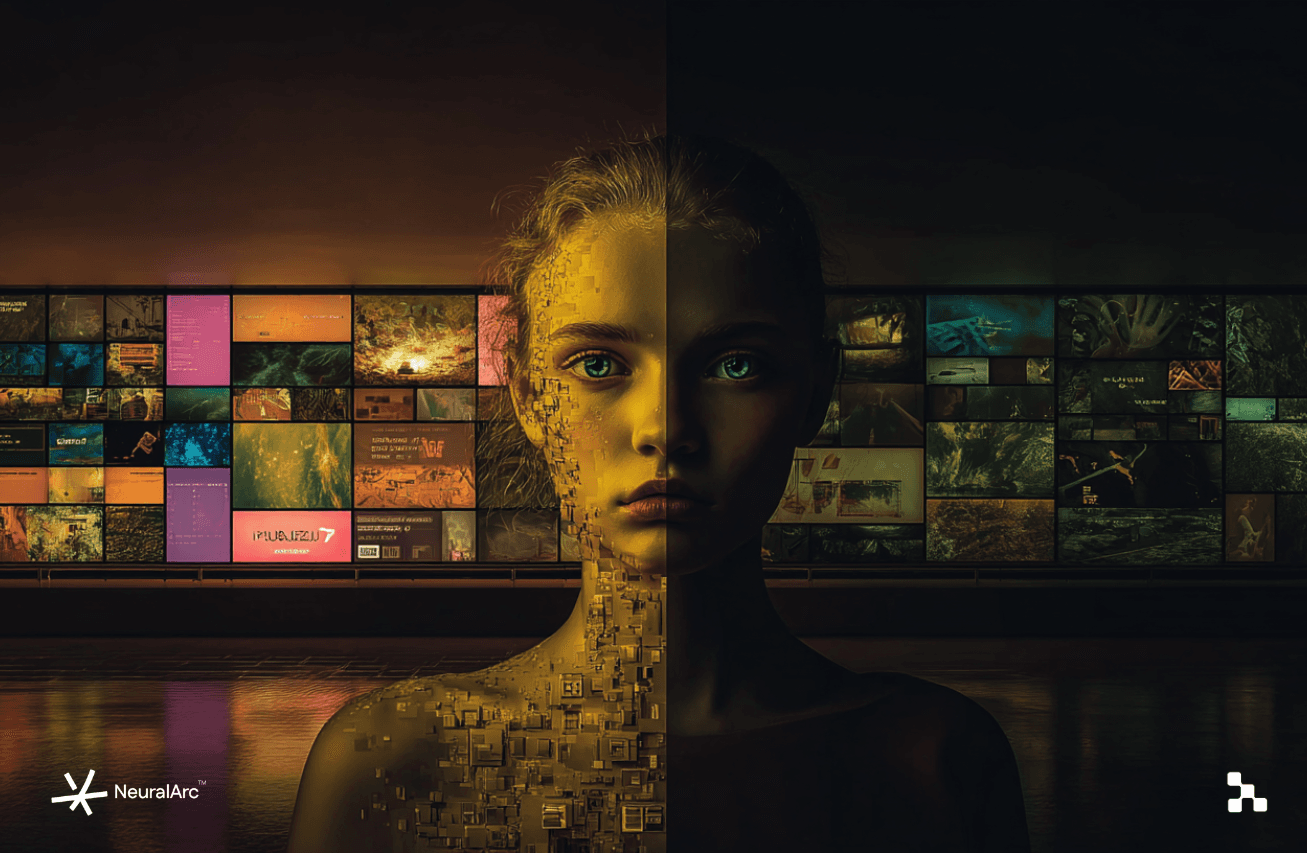

Synthetic Media & the Ethics of AI-Generated Content

The world is rapidly changing, driven by the relentless advancement of artificial intelligence. One of the most fascinating, and potentially disruptive, developments is the rise of synthetic media. This encompasses a wide range of AI-generated content, from photorealistic deepfakes that can convincingly mimic real people to AI-composed music and stunning works of art created by algorithms. While these technologies offer incredible potential for creativity and innovation, they also raise serious ethical questions that demand our attention.

Understanding Synthetic Media and Its Potential

Synthetic media refers to any content (text, images, audio, or video) that has been wholly or partially created by artificial intelligence. This content is rapidly becoming more complex and sophisticated. We're moving beyond simple text generation to AI models that can generate realistic human faces, mimic voices, and even create entire virtual worlds. This has profound implications across many sectors.

Consider the entertainment industry, where AI can be used to create special effects, personalize video game experiences, or even generate entire film scripts. In education, AI-powered tools could create learning experiences tailored to each student's needs. However, the same technologies that enable these advances can also be used to spread misinformation and manipulate public opinion. This dual-use nature is at the heart of the ethical dilemma surrounding synthetic media.

Applications and Opportunities

- Creative Industries: AI tools can assist artists, musicians, and filmmakers in generating new ideas and creating innovative content.

- Education: Personalized learning experiences and AI-generated educational materials can enhance student engagement and comprehension.

- Marketing and Advertising: AI can create targeted advertising campaigns and personalize customer experiences.

- Accessibility: AI-generated content can make information more accessible to people with disabilities, for example through automatically generated audio descriptions for videos.

The Ethical Minefield: Risks and Challenges

The potential for misuse of synthetic media is perhaps the most pressing ethical concern. Deepfakes, for example, can be used to fabricate videos of politicians appearing to make statements they never made, potentially influencing elections or inciting violence. AI-generated propaganda can spread disinformation and erode public trust in legitimate news sources. The ability to create realistic fake content at scale makes it significantly harder to distinguish truth from falsehood.

Beyond misinformation, synthetic media also raises concerns about privacy and consent. AI models are often trained on vast amounts of data, including personal information. This raises questions about how this data is collected, used, and protected. Furthermore, individuals may not have control over how their likeness or voice is used in AI-generated content. The ethical implications of creating synthetic versions of real people without their knowledge or consent are significant.

Key Ethical Concerns

- Misinformation and Manipulation: The use of deepfakes and AI-generated propaganda to spread false information and manipulate public opinion.

- Privacy and Consent: The collection and use of personal data to train AI models, and the creation of synthetic versions of real people without their knowledge or consent.

- Job Displacement: The potential for AI-generated content to automate creative tasks, leading to job losses in various industries.

- Bias and Discrimination: The risk that AI models will perpetuate and amplify existing biases, leading to unfair or discriminatory outcomes.

Navigating the Future: Towards Responsible AI Development

Addressing the ethical challenges of synthetic media requires a multi-faceted approach involving technologists, policymakers, and the public. Developing robust detection technologies to identify and flag AI-generated content is crucial. Education and media literacy programs can help individuals critically evaluate information and distinguish between real and fake content. Clear legal and regulatory frameworks are needed to address the misuse of synthetic media, including issues of liability, copyright, and data privacy.

Transparency is also essential. AI developers should be transparent about how their models are trained and how they work. Content creators should disclose when AI has been used to generate or modify content. By fostering greater transparency and accountability, we can build trust in AI systems and mitigate the risks associated with synthetic media. Ultimately, the responsible development and deployment of synthetic media require a commitment to ethical principles and a willingness to engage in ongoing dialogue about the implications of these powerful technologies. The future depends on our ability to harness the potential of AI while safeguarding truth and protecting the public interest.

The key is finding a balance: we must prioritize ethical considerations without stifling innovation. The ability to create synthetic media is a powerful one, and that power carries an equally great responsibility. By embracing thoughtful regulation, promoting transparency, and equipping citizens with media literacy, we can ensure that synthetic media becomes a tool for good, enhancing creativity and knowledge without sacrificing trust and truth.